Beyond Prompting: Why Context Engineering Is Key to AI Success

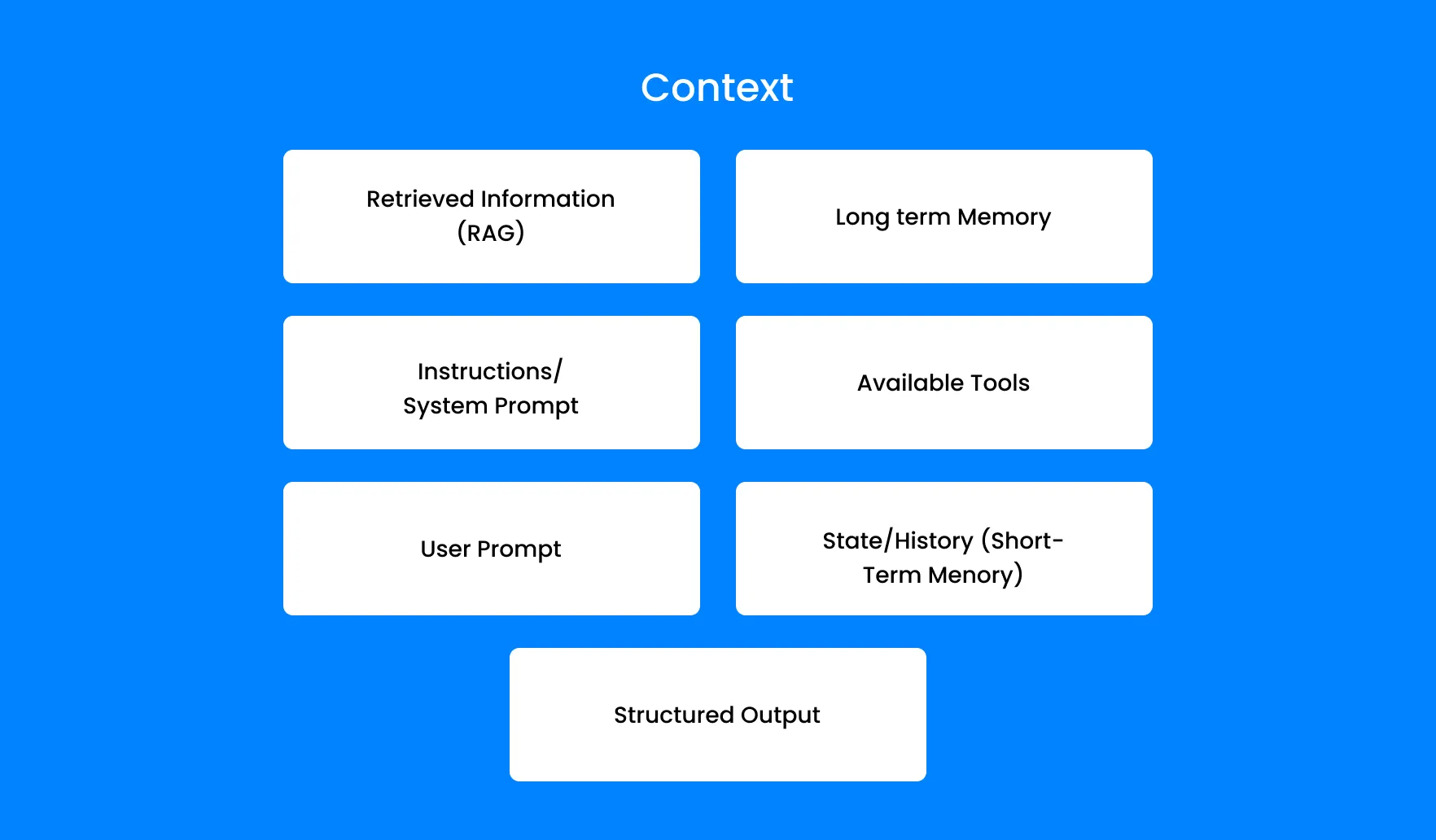

What Do We Mean by "Context"?

Before we dive into context engineering, it's important to rethink what "context" actually means in the world of AI.

Most people think of context as just the prompt — the one message they type into the chatbot. But in practice, it’s much bigger than that.

Context is everything the model sees and knows before it responds.

It includes the prompt, yes — but also the system instructions, previous messages in the thread, retrieved documents, memory, user data, tone, tools it can call, and even the format of the output you expect. It’s the full environment the model uses to make sense of the task.

When we talk about context engineering, we’re talking about shaping that environment deliberately — so the AI can do its job more accurately, reliably, and intelligently.

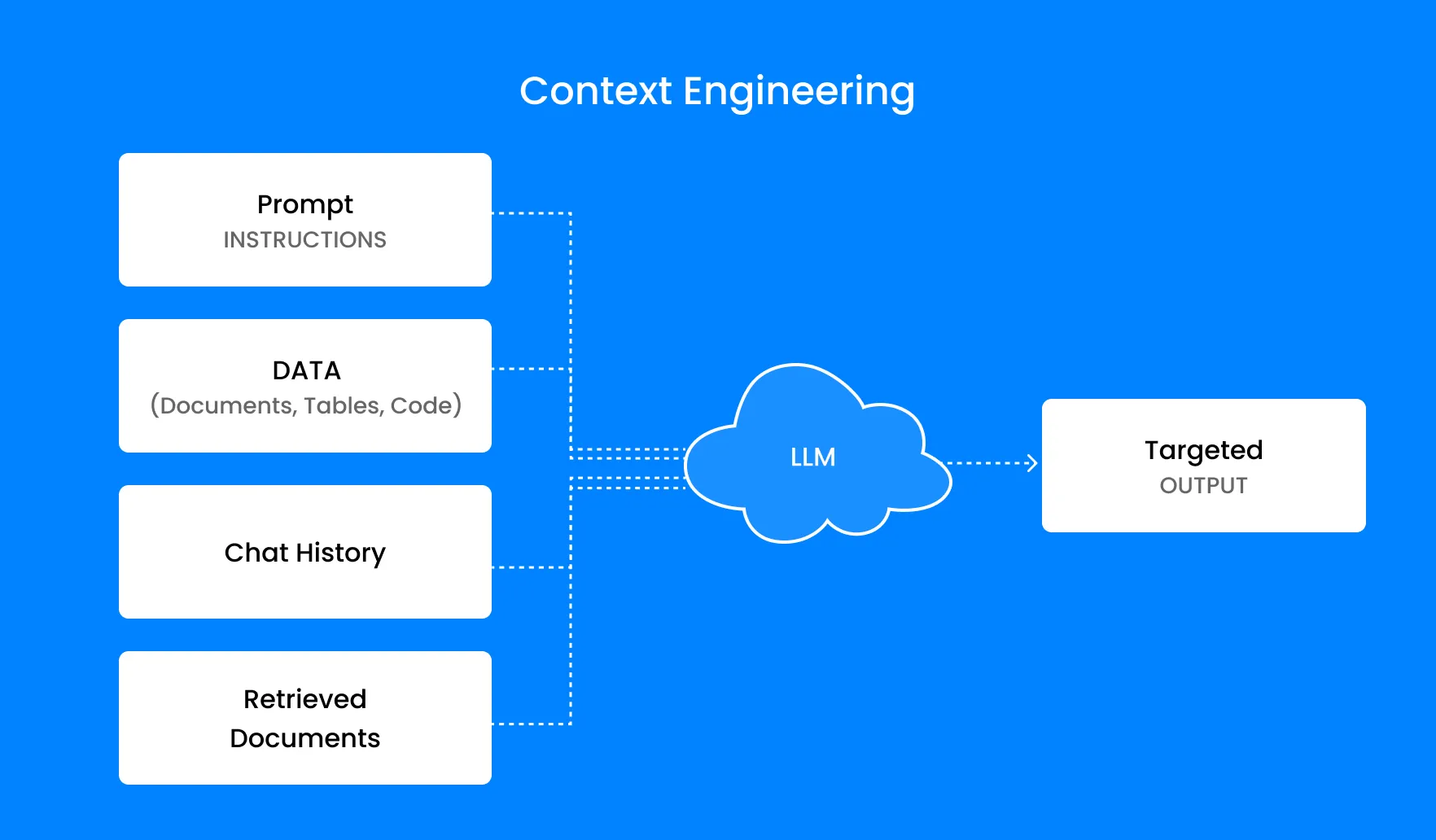

What is context engineering?

Context engineering is building dynamic systems to provide the right information and tools in the right format such that the LLM can accomplish the task.

Examples of context engineering

Some basic examples of good context engineering include:

- Tool use: Making sure that if an agent needs access to external information, it has tools that can access it. When tools return information, it is formatted in a way that is maximally digestible for LLMs.

- Short term memory: If a conversation is going on for a while, creating a summary of the conversation and using that in the future.

- Long term memory: If a user has expressed preferences in a previous conversation, being able to fetch that information.

- Prompt engineering: Clearly enumerating, in the prompt, the instructions for how an agent should behave.

- Retrieval: Fetching information dynamically and inserting it into the prompt before calling the LLM.
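
The pieces listed above can be combined in one place before the model is called. The following is a minimal sketch, not a reference implementation: `ContextInputs`, `build_messages`, and every field name are hypothetical, and the chat-style `role`/`content` message shape is an assumption about the target API.

```python
from dataclasses import dataclass, field


@dataclass
class ContextInputs:
    """Everything gathered before the model is called (all names are illustrative)."""
    instructions: str                                            # prompt engineering
    user_message: str
    conversation_summary: str = ""                               # short-term memory
    user_preferences: list[str] = field(default_factory=list)    # long-term memory
    retrieved_docs: list[str] = field(default_factory=list)      # retrieval
    tool_results: dict[str, str] = field(default_factory=dict)   # tool use


def build_messages(ctx: ContextInputs) -> list[dict[str, str]]:
    """Assemble a chat-style message list from the separate context sources."""
    sections = [ctx.instructions]
    if ctx.user_preferences:
        prefs = "\n".join(f"- {p}" for p in ctx.user_preferences)
        sections.append("Known user preferences:\n" + prefs)
    if ctx.conversation_summary:
        sections.append("Conversation so far (summary):\n" + ctx.conversation_summary)
    if ctx.retrieved_docs:
        docs = "\n\n".join(f"[doc {i}] {d}" for i, d in enumerate(ctx.retrieved_docs, start=1))
        sections.append("Retrieved documents:\n" + docs)
    if ctx.tool_results:
        # Tool output is flattened to short "name: result" lines so it is easy to read.
        tools = "\n".join(f"{name}: {result}" for name, result in ctx.tool_results.items())
        sections.append("Tool results:\n" + tools)
    return [
        {"role": "system", "content": "\n\n".join(sections)},
        {"role": "user", "content": ctx.user_message},
    ]
```

Empty sources are skipped entirely, so the model never sees a heading with nothing under it.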

Use Cases

- Prompt Engineering:

→ Copywriting variations

→ “Write me a tweet like Naval”

→ One-shot code generation

→ Flashy demos

- Context Engineering:

→ LLM agents with memory

→ Customer support bots that don’t hallucinate

→ Multi-turn flows

→ Production systems that need predictability

Prompting vs. Contexting: What's the Real Difference?

At first glance, prompt engineering and context engineering might seem like two sides of the same coin. But in practice, they play very different roles in how we get meaningful results from AI.

Prompt engineering is about crafting the right instruction — asking the AI a clear, effective question. It’s focused, intentional, and often works well in controlled or one-off situations.

Context engineering, on the other hand, is about everything that surrounds that question. It’s the process of making sure the AI has the knowledge, history, tools, and structure it needs to actually answer well — especially in dynamic, real-world environments.

Think of it this way:

Prompting is asking the right question.

Contexting is making sure the AI is in the right situation to answer it.

A carefully written prompt might work great in a test — but fall apart in production if the model doesn’t know:

- Who the user is

- What was said earlier in the conversation

- What data is relevant to the task

- What tools are available to act on the answer

This is the gap that context engineering fills. It’s about designing systems that deliver the right context to the model — automatically and reliably — so you’re not stuck writing dozens of custom prompts for every scenario.

In short:

Prompting = asking questions well.

Contexting = designing the whole situation so the AI gives smarter, more useful answers.

Key Differences

| Prompting | Context Engineering |
| --- | --- |
| Quick & simple | Detailed & strategic |
| AI makes assumptions | AI works with clear purpose and structure |
| Results may be generic | Results are tailored, consistent, and on-brand |
| Best for casual or one-off tasks | Best for repeatable, professional, or complex workflows |

Prompt Engineering vs. Context Engineering

Mindset:

Prompt Engineering is about crafting clear instructions; Context Engineering is about designing the entire flow and architecture of a model’s thought process.

Scope:

Prompt Engineering operates within a single input-output pair; Context Engineering handles everything the model sees — memory, history, tools, system prompts.

Repeatability:

Prompt Engineering can be hit-or-miss and often needs manual tweaks; Context Engineering is designed for consistency and reuse across many users and tasks.

Scalability:

Prompt Engineering starts to fall apart when scaled — more users = more edge cases; Context Engineering is built with scale in mind from the beginning.

Precision:

Prompt Engineering relies heavily on wordsmithing to get things “just right”; Context Engineering focuses on delivering the right inputs at the right time, reducing the burden on the prompt itself.

Debugging:

Prompt Engineering debugging is mostly rewording and guessing what went wrong; Context Engineering debugging involves inspecting the full context window, memory slots, and token flow.

Tools Involved:

Prompt Engineering can be done with nothing but ChatGPT or a prompt box; Context Engineering needs memory modules, RAG systems, API chaining, and more backend coordination.

Risk of Failure:

When Prompt Engineering fails, the output is weird or off-topic; when Context Engineering fails, the entire system might behave unpredictably — including forgetting goals or misusing tools.

Longevity:

Prompt Engineering is great for short tasks or bursts of creativity; Context Engineering supports long-running workflows and conversations with complex state.

Effort Type:

Prompt Engineering is like creative writing or copy-tweaking; Context Engineering is closer to systems design or software architecture for LLMs.

Imagine you’re building an AI assistant, and it receives this simple message:

"Hey, just checking if you’re around for a quick sync tomorrow."

The Functional (Basic) Demo

A basic agent sees only the prompt. The underlying code might be clean and technically correct — it calls the LLM and returns a result. But the response is bland, robotic, and disconnected from reality:

"Thank you for your message. Tomorrow works for me. May I ask what time you had in mind?"

This isn’t wrong — it’s just not helpful. The agent lacks awareness of your schedule, your relationship with the sender, or the ability to take meaningful action. It’s technically working, but it’s practically useless.

The Magical Agent

Now imagine the same request handled by an agent powered by rich, engineered context. The agent doesn’t just respond — it understands. It’s been fed everything it needs before it even touches the model:

- Your calendar (which shows you’re fully booked tomorrow)

- Your past emails with this person (revealing a friendly, casual tone)

- Your contact list (confirming this is a key partner)

- Tools it can call (like send_invite() or send_email())

Now the response looks like this:

"Hey Jim! Tomorrow’s packed on my end — back-to-back all day. Thursday AM is open if that works for you. Sent over an invite, let me know if it fits!"

This feels human. It's context-aware, proactive, and helpful — not because the model is smarter, but because the surrounding context was engineered with intention.
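
As a rough illustration of what "fed everything it needs" could look like in code, here is a minimal sketch. The tool names `send_invite` and `send_email` come from the list above, but their signatures, the stub bodies, and the `build_agent_context` helper are all assumptions made for this example; a real agent would call actual calendar, mail, and contact APIs.

```python
from typing import Callable


def send_invite(attendee: str, slot: str) -> str:
    """Stub tool: a real version would call a calendar API."""
    return f"invite sent to {attendee} for {slot}"


def send_email(to: str, body: str) -> str:
    """Stub tool: a real version would call a mail API."""
    return f"email sent to {to}"


# Registry of tools the agent is told about and can later dispatch on.
TOOLS: dict[str, Callable[..., str]] = {
    "send_invite": send_invite,
    "send_email": send_email,
}


def build_agent_context(
    message: str,
    sender: str,
    busy_tomorrow: list[str],
    past_emails: list[str],
    contacts: dict[str, str],
) -> str:
    """Gather calendar, email history, contact info, and tools into one text block."""
    relationship = contacts.get(sender, "unknown contact")
    busy = ", ".join(busy_tomorrow) if busy_tomorrow else "nothing scheduled"
    lines = [
        f"Sender: {sender} ({relationship})",
        f"Busy tomorrow: {busy}",
        "Recent emails with sender:",
        *[f"- {email}" for email in past_emails[-3:]],  # only the latest few
        "Available tools: " + ", ".join(sorted(TOOLS)),
        f"Incoming message: {message}",
    ]
    return "\n".join(lines)
```

The resulting string would be placed ahead of the model call, so the reply can reflect the full calendar and the casual tone of earlier emails instead of guessing.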

The Real Lesson

The magic isn’t in the model — it’s in the setup.

Strong AI agents aren’t just powered by prompts and APIs. They’re powered by context: what the model sees, remembers, references, and connects before generating output. Most AI agent failures aren’t about bad models — they’re about missing context.

This is why context engineering isn’t just a nice-to-have skill. It’s the difference between a demo and a product, between output that "works" and output that wows.

Context Engineering: The Hidden Layer of AI Success

Artificial Intelligence (AI) is transforming nearly every industry. From healthcare to finance, AI helps make smarter decisions faster. But a less obvious yet vital part of AI's success often goes unnoticed: context engineering. Most people focus on the model itself or the data fed into it. Yet how an AI understands the situation around it can make all the difference. Without proper context, even the smartest AI can stumble. Understanding and applying effective context engineering unlocks the full power of AI. Let’s explore what this hidden layer is, why it matters, and how it drives success in AI systems.

Highlights:

Prompt engineering gets you the first good output.

Context engineering makes sure the 1,000th output is still good.

Is One a Subset of the Other?

Prompt Engineering is a subset of Context Engineering, not the other way around.

Think of it like this:

- Prompt Engineering focuses on what to say to the model at a moment in time.

- Context Engineering focuses on what the model knows when you say it — and why it should care.

How Context Engineering Supercharges Prompt Engineering

When people talk about AI performance, they often obsess over writing the perfect prompt. But even the best-crafted prompt won’t deliver if it’s buried behind layers of noise, forgotten by the time the model gets to it, or overwhelmed by inconsistent inputs. That’s where context engineering steps in — not to replace prompting, but to elevate it.

Here’s how context engineering actively supports and strengthens prompt engineering:

- It protects your prompt. You might write the clearest, most precise instruction imaginable, but if a long FAQ and a chunk of JSON push it past the model’s context window, it is truncated and the model never sees it. Context engineering ensures that critical instructions stay front and center.

- It structures everything around the prompt. Memory, retrieval systems, and system instructions aren’t standalone features — they exist to serve the prompt. With context engineering, you’re deliberately shaping how these elements reinforce the prompt’s clarity and priority.

- It scales your prompts. Instead of manually rewriting instructions for every user or use case, you design modular, dynamic context. That way, prompts adapt to different users and tasks through structured inputs — not endless rewrites.

- It manages real-world constraints. Token limits, latency, compute costs — these are all realities of building production AI. Context engineering decides what matters most in each interaction, trimming excess and preserving essential meaning.
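
One way to picture the "protect the prompt" and "manage constraints" points together is a budget-aware packer: the instructions are always kept, and other material is added by priority until the budget runs out. This is a sketch under stated assumptions: `estimate_tokens` is a crude word count standing in for a real tokenizer, and `fit_to_budget` and its priority scheme are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_budget(
    instructions: str,
    candidates: list[tuple[int, str]],
    budget: int,
) -> list[str]:
    """Keep the instructions first, then add candidates by priority while they fit.

    candidates holds (priority, text) pairs; a lower number means more important.
    """
    used = estimate_tokens(instructions)
    if used > budget:
        raise ValueError("instructions alone exceed the budget")
    kept = [instructions]
    for _, text in sorted(candidates, key=lambda c: c[0]):
        cost = estimate_tokens(text)
        if used + cost <= budget:  # skip anything that would overflow
            kept.append(text)
            used += cost
    return kept
```

Because the instructions are counted first and never dropped, a long FAQ or a large JSON payload can only crowd out lower-priority material, not the prompt itself.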

Practical Frameworks, Strategies, and Examples

So how does this all come together in the real world? Let’s look at a practical approach — the Context Pyramid Framework — and a real-world use case to illustrate its impact.

Context Pyramid Framework

This framework emphasizes that prompt engineering (at the top) only works when supported by strong lower layers — much like a house needs a foundation.

Example:

Financial Advisory Chatbot

Imagine you're building an AI-powered financial assistant. Here’s how context engineering plays out across the pyramid:

- Foundation Layer: The bot is built on a finance-tuned language model, either through fine-tuning or domain-specific pretraining. It understands financial terminology and context deeply.

- Integration Layer: The assistant connects to real-time data sources like live stock market prices, user portfolios, or economic news feeds. This allows the AI to access fresh, relevant information at the time of the request.

- Interaction Layer: At runtime, the prompt shapes the bot’s personality (“You are a trusted financial advisor”), injects user-specific data (like recent conversation or investment goals), and controls tone and format.

By thoughtfully engineering context at all three levels, the assistant can deliver personalized, up-to-date, and reliable financial advice. It doesn’t just respond — it reasons, adapts, and maintains continuity, all thanks to the scaffolding built around the prompt.
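
The three layers of the financial assistant can be sketched in a few lines. Everything here is an assumption made for illustration: `MODEL_NAME` is a placeholder rather than a real model, `fetch_quotes_stub` returns made-up prices in place of a market-data feed, and the request dictionary only loosely mirrors a chat-style API payload.

```python
from typing import Callable

# Foundation layer: which model serves the request (placeholder name, not a real model).
MODEL_NAME = "finance-tuned-model"


def fetch_quotes_stub() -> dict[str, float]:
    """Integration layer stand-in: a real version would query a market-data feed."""
    return {"ACME": 101.5, "GLOBEX": 48.2}


def build_request(
    question: str,
    goals: list[str],
    holdings: list[str],
    fetch_quotes: Callable[[], dict[str, float]] = fetch_quotes_stub,
) -> dict:
    """Interaction layer: combine persona, user data, and fresh data into one request."""
    quotes = fetch_quotes()
    # Only include prices for symbols the user actually holds.
    relevant = {symbol: quotes[symbol] for symbol in holdings if symbol in quotes}
    system = "\n".join([
        "You are a trusted financial advisor.",
        "Investment goals: " + "; ".join(goals),
        "Current prices: " + ", ".join(f"{s}={p:.2f}" for s, p in relevant.items()),
        "Answer in plain language and keep it under 150 words.",
    ])
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
    }
```

Passing the data fetcher in as a parameter keeps the integration layer swappable, so the same prompt logic can be exercised against a stub or a live feed.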

Advancements in Context-Aware AI

Deep learning models are increasingly designed to make better use of context, and future systems may anticipate user needs and respond proactively in ways that feel almost human.

As AI systems evolve, the limitations of simple prompt-based interactions are becoming increasingly evident. Modern AI doesn’t just need inputs—it needs context. This has fueled a wave of innovation in context-aware architectures that enable AI models to understand not just what users are saying, but why they’re saying it, what came before, and what’s relevant now.

Recent advancements have seen AI systems becoming more adept at leveraging historical interactions, user profiles, task-specific metadata, and even environmental cues to deliver smarter, more personalized results. Technologies such as retrieval-augmented generation (RAG), memory-augmented models, and fine-tuned multi-modal systems are helping bridge the gap between raw input and real-world relevance.

By integrating context into their core design—whether through dynamic memory modules, long-context transformers like Claude and GPT-4o, or enterprise knowledge grounding—these systems are shifting from reactive tools to proactive collaborators.

This progression marks a critical turning point in AI: from models that wait for instructions to models that anticipate needs and align with broader user intent.

The result? Dramatically improved performance in tasks like customer support, content generation, decision-making, and strategic planning —especially in enterprise environments where accuracy and nuance are non-negotiable.

Conclusion

Context engineering might be invisible, but it’s the secret sauce behind many successful AI systems. It helps turn raw data into meaningful insights, improving accuracy, relevance, and user satisfaction. Effective techniques involve gathering good data, modeling it smartly, and continuously updating the system. However, challenges like bias and privacy must be addressed with care. The future of AI depends on embedding strong context understanding—making systems smarter, safer, and more useful. Taking action now by prioritizing context engineering can set your AI projects apart. Don’t overlook this hidden layer—it’s the foundation of true AI success.

By Divya K

Cloud Engineer
